Organizing scRNA-seq data with LaminDB

Occasionally, when I’m curious about something scRNA-seq related, I analyze some data. Often this leads to posts on this website.

I have made it a habit to try to find some recent, somewhat interesting, scRNA-seq data for these kinds of analysis. This is because I don’t want to end up mentally overfitting to a particular dataset, and I also consider it a way to keep up with how the scale and complexity of datasets are changing over time.

Over the years, I have ended up collecting a large number of scRNA-seq datasets. I did a global search for ‘*.h5ad’ on my Google Drive, and found over 300 files in various directories scattered throughout my drive.

Due to the increasing popularity and scale of scRNA-seq experiments, products for organizing large collections of data, such as LaminDB.

LaminDB is designed for tracking biological data in general. It offers plugins to manage ontologies related to scRNA-seq data as well as for AnnData format data.

The LaminDB database tracks metadata about the data, as well as the location of the data. It can track data locally on a computer, but it is probably most useful when storing your data on cloud storage.

After filtering duplicates and non-public H5ADs from my list, I ingested all my H5ADs into LaminDB, while also spending some time to hunt down the original publications for all the datasets and tag them with the DOI of the source publication for each dataset.

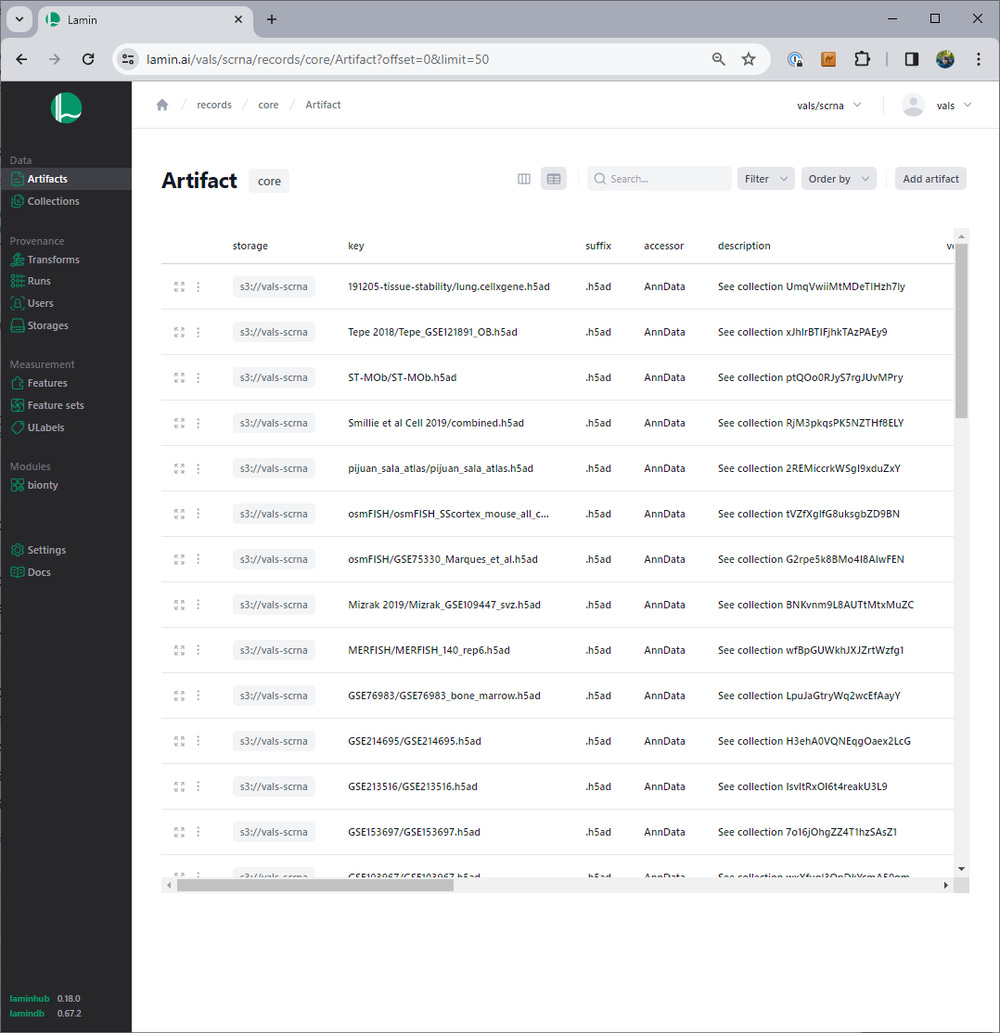

LaminDB is organized around ‘artifacts’ and ‘collections’. Each H5AD file is considered an ‘artifact’, which can be annotated with ‘features’ and ‘labels’.

Since each artifact (H5AD file) has an article DOI associated, I was able to use the single cell studies database to add some further annotation to the files: organism, tissue, and technique associated with the DOI.

Features and labels can be arbitrarily registered, and you can build your own ontologies to organize your data. For single-cell genomics data, Lamin provides a plugin, ‘bionty’, with pre-defined labels and features using relevant public ontologies, including organisms and tissues. The data from the single cell studies database was used to create these labels for the artifacts.

The LaminDB database can be explored on lamin.ai, and can be loaded by running lamin load vals/scrna in the terminal. In my case, the database is hosted on AWS. After running the load command, the database is directly accessible in Python through the ‘lamindb’ package, which is typically imported as ln.

For example, we can count how many files are tracked by the database.

> ln.Artifact.filter().all().count()

236These are the 236 H5AD files I ingested to the database after deduplication. This deduplication happened automatically as I ingested files based on the hash of the file contents.

Files of interest can be identified by filtering based on metadata (which in this case is on the publication level).

> organisms = lb.Organism.lookup()

> tissues = lb.Tissue.lookup()

> techniques = lb.ExperimentalFactor.lookup()

> query = (

ln.Artifact

.filter(organism = organisms.mouse)

.filter(tissues = tissues.brain)

.filter(experimental_factors = techniques.chromium)

)

> print(query.df().head())

uid storage_id

id

15 88k1xpSWBdJzEbYUf6MZ 1 \

16 UDH0CAo3JHo0uQUiAtUV 1

21 kFvwhKtIBY0mhQTOqaOX 1

22 PBAwUla9eERbI1KJQADZ 1

23 HW9uS90M2PVyeX50o6ct 1

key suffix accessor

id

15 cortex-align-2018/Zeisel 2018/l1_cortex2.h5ad .h5ad AnnData \

16 cortex-align-2018/Zeisel 2018/l1_cortex3.h5ad .h5ad AnnData

21 hca-benchmark-2019/Ding/2019-05-Ding-et-al-bio... .h5ad AnnData

22 hca-benchmark-2019/Ding/PBMC/PBMC_read_counts.... .h5ad AnnData

23 hca-benchmark-2019/Ding/PBMC/PBMC_umi_counts.h5ad .h5ad AnnData

description version size hash hash_type n_objects

id

15 None None 77363974 SlY49Cv9vnMGofdSCMOj90 sha1-fl None \

16 None None 64354637 ZVFW2H_B1ZlD9KK_WKS11T sha1-fl None

21 None None 224711660 e7bzC5ZhAV-e_swr_6jQie sha1-fl None

22 None None 307531280 DaQIiOBzYf5VjXmOmWE1tQ sha1-fl None

23 None None 296120754 aKSloGAGbodFRA8PlBR1Vz sha1-fl None

n_observations transform_id run_id visibility key_is_virtual

id

15 None None None 1 True \

16 None None None 1 True

21 None None None 1 True

22 None None None 1 True

23 None None None 1 True

created_at updated_at

id

15 2023-11-06 07:03:39.184199+00:00 2024-01-21 07:04:35.258135+00:00 \

16 2023-11-06 07:04:08.454681+00:00 2024-01-21 07:04:38.216085+00:00

21 2023-11-06 07:16:59.839767+00:00 2024-01-21 07:06:08.275496+00:00

22 2023-11-06 16:19:26.830698+00:00 2024-01-21 07:06:18.767072+00:00

23 2023-11-06 16:20:20.747104+00:00 2024-01-21 07:06:29.318662+00:00

created_by_id

id

15 1

16 1

21 1

22 1

23 1Artifacts, here representing H5AD files, contain a lot of different metadata that relates to provenance, meaning the source and transformations that have occurred for the file, as well as annotations added to the files.

> artifact = query.first()

> artifact.describe()

Artifact(uid='88k1xpSWBdJzEbYUf6MZ', key='cortex-align-2018/Zeisel 2018/l1_cortex2.h5ad', suffix='.h5ad', accessor='AnnData', size=77363974, hash='SlY49Cv9vnMGofdSCMOj90', hash_type='sha1-fl', visibility=1, key_is_virtual=True, updated_at=2024-01-21 07:04:35 UTC)

Provenance:

🗃️ storage: Storage(uid='3wVRAheC', root='s3://vals-scrna', type='s3', region='us-west-1', updated_at=2023-11-05 20:36:19 UTC, created_by_id=1)

👤 created_by: User(uid='8joZB4lw', handle='vals', updated_at=2023-11-05 20:36:19 UTC)

⬇️ input_of (core.Run): ['2024-01-25 15:18:34 UTC']

Features:

external: FeatureSet(uid='b20JpuwZ5gfBUAvcS8jP', n=3, registry='core.Feature', hash='iKuEmOyrwt4opSDzC-dF', updated_at=2024-01-21 07:29:48 UTC, created_by_id=1)

🔗 organism (1, bionty.Organism): 'mouse'

🔗 tissue (1, bionty.Tissue): 'brain'

🔗 technique (1, bionty.ExperimentalFactor): 'Chromium'

Labels:

🏷️ organism (1, bionty.Organism): 'mouse'

🏷️ tissues (1, bionty.Tissue): 'brain'

🏷️ experimental_factors (1, bionty.ExperimentalFactor): 'Chromium'Since LaminDB has native support for H5AD files and AnnData objects, the contents of the artifact, as represented by a link to an H5AD file, can be loaded to the local Python session from the cloud storage it is stored in.

> adata = artifact.load()

> adata

AnnData object with n_obs × n_vars = 20811 × 27998

obs: 'Age', 'AnalysisPool', 'AnalysisProject', 'CellConc', 'Cell_Conc', 'ChipID', 'Class', 'ClassProbability_Astrocyte', 'ClassProbability_Astrocyte,Immune', 'ClassProbability_Astrocyte,Neurons', 'ClassProbability_Astrocyte,Oligos', 'ClassProbability_Astrocyte,Vascular', 'ClassProbability_Bergmann-glia', 'ClassProbability_Blood', 'ClassProbability_Blood,Vascular', 'ClassProbability_Enteric-glia', 'ClassProbability_Enteric-glia,Cycling', 'ClassProbability_Ependymal', 'ClassProbability_Ex-Neurons', 'ClassProbability_Ex-Vascular', 'ClassProbability_Immune', 'ClassProbability_Immune,Neurons', 'ClassProbability_Immune,Oligos', 'ClassProbability_Neurons', 'ClassProbability_Neurons,Cycling', 'ClassProbability_Neurons,Oligos', 'ClassProbability_Neurons,Satellite-glia', 'ClassProbability_Neurons,Vascular', 'ClassProbability_OEC', 'ClassProbability_Oligos', 'ClassProbability_Oligos,Cycling', 'ClassProbability_Oligos,Vascular', 'ClassProbability_Satellite-glia', 'ClassProbability_Satellite-glia,Cycling', 'ClassProbability_Satellite-glia,Schwann', 'ClassProbability_Schwann', 'ClassProbability_Ttr', 'ClassProbability_Vascular', 'Clusters', 'Comments', 'DateCaptured', 'Date_Captured', 'DonorID', 'Flowcell', 'Fraction Reads in Cells', 'Label', 'Mean Reads per Cell', 'Median Genes per Cell', 'MitoRiboRatio', 'NGI_PlateWell', 'NumPooledAnimals', 'Num_Pooled_Animals', 'Number of Reads', 'Outliers', 'PCRCycles', 'PCR_Cycles', 'PassedQC', 'PlugDate', 'Plug_Date', 'Project', 'Q30 Bases in Barcode', 'Q30 Bases in UMI', 'Reads Mapped Confidently to Exonic Regions', 'Reads Mapped Confidently to Intergenic Regions', 'Reads Mapped Confidently to Intronic Regions', 'Reads Mapped Confidently to Transcriptome', 'SampleID', 'SampleIndex', 'SampleOK', 'Sample_Index', 'SeqComment', 'SeqLibDate', 'SeqLibOk', 'Seq_Comment', 'Seq_Lib_Date', 'Seq_Lib_Ok', 'Serial_Number', 'Sex', 'Species', 'Strain', 'Subclass', 'TargetNumCells', 'Target_Num_Cells', 'TimepointPool', 'Tissue', 'Transcriptome', 'Valid Barcodes', '_KMeans_10', '_LogCV', '_LogMean', '_NGenes', '_Total', '_Valid', '_X', '_Y', '_tSNE1', '_tSNE2', 'cDNAConcNanogramPerMicroliter', 'cDNALibOk', 'cDNA_Lib_Ok', 'ngperul_cDNA', 'ClusterName', 'Description', 'Probable_location'

var: 'Accession', '_LogCV', '_LogMean', '_Selected', '_Total', '_Valid'Say we want to look at some human 10x Chromium data, but we’re not sure which tissue, or even what tissues are represented in the database. We can query the database and investigate other pieces of metadata for the results.

> query = (

ln.Artifact

.filter(organism = organisms.human)

.filter(experimental_factors = techniques.chromium)

)

> (

query

.df(include = 'tissues__name')

.explode('tissues__name')

.groupby(['tissues__name'])

.size()

.sort_values(ascending = False)

.head(5)

)

tissues__name

blood 39

colon 35

cell culture 30

brain 3

lung 3

dtype: int64Based on results, we decide that blood would be an interesting tissue for our current question.

> query = (

ln.Artifact

.filter(organism = organisms.human)

.filter(tissues = tissues.blood)

.filter(experimental_factors = techniques.chromium)

)

> print(query.df().head())

uid storage_id

id

90 UWETAZbzAURlLQCyTV1d 1 \

10 ZbAhp5ASiaOaKBqpOFQ2 1

11 bEIwnqnBRf3ewAjRzNOY 1

24 S2hi2e0q1t0rr2KL667H 1

25 GZN0nW18UUQ1JOfUPyQR 1

key suffix accessor

id

90 Boland et al/GSE125527.h5ad .h5ad AnnData \

10 220222 Exploratory analysis with scVI/GSE13826... .h5ad AnnData

11 220222 Exploratory analysis with scVI/GSE13826... .h5ad AnnData

24 hca-benchmark-2019/h5ads/10X2x5Kcell250Kreads_... .h5ad AnnData

25 hca-benchmark-2019/h5ads/10X2x5Kcell250Kreads_... .h5ad AnnData

description version size hash hash_type

id

90 None None 526865612 5c33pdXfI7munQb_UJV8bO sha1-fl \

10 None None 1246663095 hFW_l02ns5NOOE9pCOX64h sha1-fl

11 None None 619244593 Fj40Pif5bUgrAt4vllmHY2 sha1-fl

24 None None 232265080 yZysuTc6RctVHhd2E2H3lc sha1-fl

25 None None 245881240 w9nksKG3qtOHaKmYxNYmge sha1-fl

n_objects n_observations transform_id run_id visibility key_is_virtual

id

90 None None None None 1 True \

10 None None None None 1 True

11 None None None None 1 True

24 None None None None 1 True

25 None None None None 1 True

created_at updated_at

id

90 2023-11-07 04:42:48.631068+00:00 2024-01-21 07:04:08.645070+00:00 \

10 2023-11-06 06:58:09.539943+00:00 2024-01-21 07:04:00.774051+00:00

11 2023-11-06 06:59:46.752959+00:00 2024-01-21 07:04:04.738173+00:00

24 2023-11-06 16:25:39.070645+00:00 2024-01-21 07:06:44.435484+00:00

25 2023-11-06 16:26:15.070279+00:00 2024-01-21 07:06:59.559525+00:00

created_by_id

id

90 1

10 1

11 1

24 1

25 1Then we can have a closer look at the first result, and load the data.

> artifact = query.first()

> artifact.describe()

Artifact(uid='UWETAZbzAURlLQCyTV1d', key='Boland et al/GSE125527.h5ad', suffix='.h5ad', accessor='AnnData', size=526865612, hash='5c33pdXfI7munQb_UJV8bO', hash_type='sha1-fl', visibility=1, key_is_virtual=True, updated_at=2024-01-21 07:04:08 UTC)

Provenance:

🗃️ storage: Storage(uid='3wVRAheC', root='s3://vals-scrna', type='s3', region='us-west-1', updated_at=2023-11-05 20:36:19 UTC, created_by_id=1)

👤 created_by: User(uid='8joZB4lw', handle='vals', updated_at=2023-11-05 20:36:19 UTC)

⬇️ input_of (core.Run): ['2024-01-25 15:18:34 UTC']

Features:

external: FeatureSet(uid='b20JpuwZ5gfBUAvcS8jP', n=3, registry='core.Feature', hash='iKuEmOyrwt4opSDzC-dF', updated_at=2024-01-21 07:29:48 UTC, created_by_id=1)

🔗 organism (1, bionty.Organism): 'human'

🔗 tissue (2, bionty.Tissue): 'rectum', 'blood'

🔗 technique (1, bionty.ExperimentalFactor): 'Chromium'

Labels:

🏷️ organism (1, bionty.Organism): 'human'

🏷️ tissues (2, bionty.Tissue): 'rectum', 'blood'

🏷️ experimental_factors (1, bionty.ExperimentalFactor): 'Chromium'

> adata = artifact.load()

> adata

AnnData object with n_obs × n_vars = 68627 × 10160

obs: 'cell_id', 'patient_assignment', 'tissue_assignment', 'disease_assignment', 'celltype'

> print(adata.obs)

cell_id patient_assignment tissue_assignment

AAACCTGCATGGTAGG-1 1 C1 R \

AAACGGGAGATAGGAG-1 2 C1 R

AACACGTGTGTGCCTG-1 3 C1 R

AACCGCGGTTCAGACT-1 4 C1 R

AACCGCGTCTCGCATC-1 5 C1 R

... ... ... ...

AAAGATGAGAATGTGT-30 68623 C9 PBMC

CGACTTCGTGACTACT-30 68624 C9 PBMC

GTATTCTCAAGCCATT-30 68625 C9 PBMC

TGCCCATAGTTGCAGG-30 68626 C9 PBMC

TGGTTCCAGACGCACA-30 68627 C9 PBMC

disease_assignment celltype

AAACCTGCATGGTAGG-1 healthy T

AAACGGGAGATAGGAG-1 healthy T

AACACGTGTGTGCCTG-1 healthy T

AACCGCGGTTCAGACT-1 healthy T

AACCGCGTCTCGCATC-1 healthy T

... ... ...

AAAGATGAGAATGTGT-30 healthy T

CGACTTCGTGACTACT-30 healthy T

GTATTCTCAAGCCATT-30 healthy T

TGCCCATAGTTGCAGG-30 healthy T

TGGTTCCAGACGCACA-30 healthy T

[68627 rows x 5 columns]Previously, my workflow would have been to remember that at some point I downloaded some human data with blood, remembered if I used it in a blog post, and hopefully a copy of the data would be stored in my Google Drive folder for that post. With the LaminDB database, all data I have used previously is instantly accessible.

At this point, I have only ingested H5AD files and annotated them. LaminDB also supports tracking metadata for all the single cells and genes in the H5AD files using standardized ontologies. This seems extremely useful for searching for data of interest, and keeping it harmonized, but would require quite a lot of work for my current collection.

Even just using the tracking of H5AD files, tracking the files with the database will be a far better solution than my many Google Drive folders.

Alex Wolf and Sunny Sun of Lamin helped me with adding annotations to my artifacts, as well as provided feedback on this post, and Lamin offered to host my collection so it can be made available publicly through lamin.ai. Notebooks with code related to this work are available on GitHub.