Reverse Differential Expression for cell type markers

Differential Expression

If you have two types of cells, you might want to find what molecular features distinguish them from each other. A popular assay for this is RNA-sequencing. If you measure the RNA from different genes in the two cell types, you can identify which RNAs are more abundant in one cell type or the other. This is known as differential expression (DE) analysis, and we usually say that genes are upregulated or downregulated depending if they are more or less abundant. (I'd argue "enriched" or "depleted" would be better terms, because "regulated" suggests some causality you're not measuring.)

Abstracting away many details about normalisation and data noise, say \( x^g \) is the gene expression, and \( y \) be and indicator of cell type such that \( y = 1 \) for one, and \( y = -1 \) for the other. In differential expression analysis, for every gene \( g \) we investigate the relation $$ x^g = \beta_0^g + \beta_1^g \cdot y + \varepsilon $$

with regards to the data, and ask the question of whether \( \beta_1^g \) is different from zero in a meaningful way.

To make the example more concrete, let's consider the data from Velten et al, where the authors studied mES cells (\( N = 96 \)) and NS cells (\( N = 48 \)). Say that \( y = 1 \) for mESC, and \( y = -1 \) for NSC. For example, if \( \beta_1^g \) is postive the gene is more abundant in mESCs, and the magnitude of \( \beta_1^g \) is the effect size.

For this simple example, let's investigate 200 genes from the data (selected by having high variance) with expression on a log scale. For the sake of simplicity, let's assume normal distributed noise \( \varepsilon \sim \mathcal{N}(0, \sigma^2_g) \).

The model described above can be implemented in Stan in the following way

data {

int<lower=0> N;

int<lower=0> G;

matrix[N, G] X;

vector[N] y;

}

parameters {

vector[G] beta0;

vector[G] beta;

real<lower=0> sigma[G];

}

model {

beta ~ normal(0, 1.);

beta0 ~ normal(0, 1.);

for (i in 1:G) {

col(X, i) ~ normal(beta0[i] + y * beta[i], sigma[i]);

}

}(To keep it simple, we collect all the genes in a matrix and analyse them all at once).

Running the model, we obtain samples from the posterior distribution of the Effect size of each gene (\( \beta_1^g \)). We plot the mean of this, with 95% confidence intervals (CI).

Several of the 200 genes have effect sizes such that the CI is far away from 0. A handy way to quantify the uncertainty of the effect sizes is to invsetigate the probability of the effect size being 0, let's call this a P-value. A simple way to do that in this setting is $$ P = \min( p(\beta_1^g < 0 | y, x^g), p(-\beta_1^g < 0 | y, x^g) ). $$

In other words, we just count how many of the posterior samples are on the wrong side of 0 for the effect size. Comparing the effect size with the P-value is known as a volcano plot.

In this case we drew 2,000 samples, which puts a limit to the smallest P-value we can observe as 1 / 2,000, causing the plateau in the figure.

Reverse Differential Expression

The reason I'm writing about this, is that I had a conversation with Tomás about this in relation to our notion of cell types.

It's kind of backwards!

We had the cell types, and then investigated which genes were expressed in the cells. In essence, from a machine learning perspective, we are assessing if the cell type label can predict the gene expression. But what we want to do is investigate how gene expression predicts cell type!

So can we do it the other way around? Keeping the notation like above, we want to investigate $$ y = \beta_0 + \sum_{g=1}^G \beta^g \cdot x^g + \varepsilon. $$

Now, if \( \beta^g \) is positive, the gene will be a predictor for mESC identity, and the magnitude of this will inform about how important it is for determining the cell type. (I think we can still call this effect size in a meaningful way.)

Let's refer to this as reverse differential expression, and implement it in Stan in this way:

data {

int<lower=0> N;

int<lower=0> G;

matrix[N, G] X;

real y[N];

}

parameters {

real beta0;

vector[G] beta;

real<lower=0> sigma;

}

model {

beta ~ normal(0, 1.);

beta0 ~ normal(0, 1.);

y ~ normal(beta0 + X * beta, sigma);

}After sampling, we can plot the effect sizes of the genes like above.

The results are not exactly stellar. All effect sizes are quite small, and very uncertain! The P-values illustrate this as well.

Well, negative results are also results.

Sparse Reverse Differential Expression

Can we improve this somehow? We can think a little about the expected biology. While biology is complex and intricite, and everything interacts with everything, the results of this way of thinking might not be very actionable. What we expect (or rather hope) is that a small number of key genes determine cell type.

In the statistical sense, it means our prior expectation on the effect sizes is that most of the time they are 0. Allen Riddell wrote an excellent post about this concept and the "Horseshoe prior" here. Based on the code in the post, we can make a sparse version of the reverse DE in the following way

data {

int<lower=0> N;

int<lower=0> G;

matrix[N, G] X;

real y[N];

}

parameters {

real beta0;

vector[G] beta;

vector<lower=0>[G] lambda;

real<lower=0> tau;

real<lower=0> sigma;

}

model {

lambda ~ cauchy(0, 1);

tau ~ cauchy(0, 1);

for (i in 1:G) {

beta[i] ~ normal(0, lambda[i] * tau);

}

beta0 ~ normal(0, 1.);

y ~ normal(beta0 + X * beta, sigma);

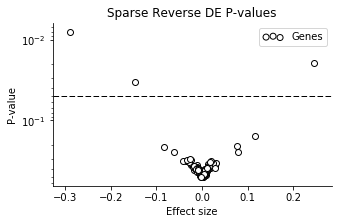

}Again, we perform the sampling and plot the effect sizes.

Now the uncertainty is not very large for most of the genes! A small number of the genes have larger effect sizes, though with pretty large CI's. We can look at the volcano plot to get a clearer summary.

Three of the genes have particularly small P-values, in order: mt-Nd2, Dppa5a, Ckb.

I'm not really expecting very relevant results from this analysis, because the noise models are very crude, and I haven't corrected for any technical factors. But Dppa5a is a well known mESC pluripotency marker, and Ckb is known to be highly abundant in brain (NSC's are neural stem cells). While not very scientific, it's fun that it "makes sense".

I just wanted to explore Bayesian thinking in differential expression, and give some small Stan examples on how to investigate small conceptual ideas of this.

This post is available as a notebook here, with all analysis and code.