K-means in TensorFlow

I have been getting a bit interested in the new fancy TensorFlow package. As a little exercise to figure out roughly how to use it, I figured I could implement a simple model. I chose to implement k-means clustering, since it’s very simple, but a bit different from the regression/classification models in TensorFlows examples.

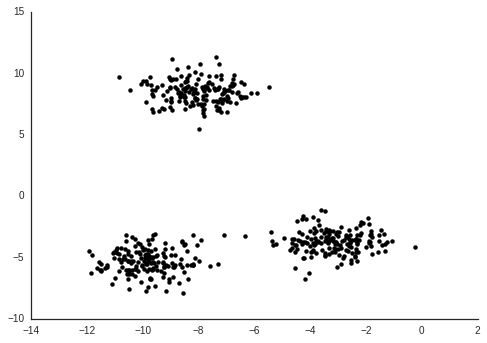

We’ll make some ideal clustered data using scikit-learn.

from sklearn import datasets

Xi, yi = datasets.make_blobs(500, random_state=1111)

plt.scatter(*Xi.T, c='k', lw=0);

`

Now we want to divide these in to clusters. We will use the objective used by Bottou & Bengio 1995,

$$ E(w) = \sum_i \min_k (x_i - w_k)^2. $$

Or in words: the sum of the (squared) distances to the closest prototype $ w_k $.

For our implementation, first we need to put in data. Secondly, we need to define the variables we want to optimize. In this case we want the prototypes $ w $.

import tensorflow as tf

# Number of clusters

k = 3

# Input

Xi = Xi.astype(np.float32)

X = tf.placeholder_with_default(Xi, Xi.shape, name='data')

# Model variables, initiate randomly from data

idx = np.random.choice(range(Xi.shape[0]), k, replace=False)

Wi = Xi[idx].copy()

W = tf.Variable(Wi, name='prototypes')

Now we only need to write out how the objective function value is calculated from the data and the variables.

# Reshape data and prototypes tensors

Xc = tf.concat(2, k * [tf.expand_dims(X, 2)])

Wc = tf.transpose(tf.expand_dims(W, 2), [2, 1, 0])

# Define objective of model

distances = tf.reduce_sum((Xc - Wc) ** 2, 1)

cost = tf.reduce_sum(tf.reduce_min(distances, 1))

After the tensors have been formatted correctly this is very simple. We just calculate the distances from each point to each prototype. Then we sum the smallest of these per data-point.

The final value in cost is represented in such a way that TensorFlow automatically can figure out the gradients for minimizing it with respect to the variables in W.

# Make an object which will minimize cost w.r.t. W

optimizer = tf.train.GradientDescentOptimizer(0.001).minimize(cost)

This is the nice thing with TensorFlow, you don’t need to care about finding gradients for objective functions.

sess = tf.Session()

sess.run(tf.initialize_all_variables())

for i in range(100 + 1):

sess.run(optimizer)

if i % 10 == 0:

print('Iteration {}, cost {}'.format(i, sess.run(cost)))

Iteration 0, cost 21533.69140625

Iteration 10, cost 950.78369140625

Iteration 20, cost 940.453369140625

Iteration 30, cost 940.4503784179688

Iteration 40, cost 940.450439453125

Iteration 50, cost 940.450439453125

Iteration 60, cost 940.450439453125

Iteration 70, cost 940.450439453125

Iteration 80, cost 940.450439453125

Iteration 90, cost 940.450439453125

Iteration 100, cost 940.450439453125

Once we’ve optimized the W we get the number for them. Then we can make cluster labels for the data points by looking at which prototype $ w_k $ is closest to a data point.

W_learned = sess.run(W)

y = sess.run(tf.arg_min(distances, 1))

sess.close()

plt.scatter(*Xi.T, c=y, lw=0, cmap=cm.Greys_r, vmax=k + 0.5, label='data');

plt.scatter(*W_learned.T, c='none', s=100, edgecolor='r', lw=2, label='prototypes');

plt.legend(scatterpoints=3);

This is also available in notebook form here: https://gist.github.com/vals/a01a37b14c4918df7937b30d43327837